Every time technology changes, trust has to change with it.

The evolution of trust

In the 1950s, trust was personal. You went to your bank, and Martha at First National shook your hand. That handshake was enough.

In the 1990s, trust went online. At first, people said, "Never type your credit card number on the internet." It felt crazy. Then PayPal appeared. They didn't invent payments. They invented trust for online payments. They absorbed fraud and chargeback risk so people would feel safe. That is a big part of why e-commerce worked.

In the 2010s, trust went mobile. At first, banking on your phone sounded ridiculous. But secure apps, biometrics, and two-factor authentication (2FA) made it safe. Now no one thinks twice.

And in 2024, AI can drive cars, help diagnose cancer, and write code. But the moment you ask it to move money, even $500, everyone panics.

Why AI and banking don't mix

Banks were built for deterministic systems. Press button A, get result B. AI is probabilistic. It interprets, it infers, it adapts.

When you connect the two directly, you get caught in a bind:

If you make AI helpful, it becomes risky.

If you make AI safe, it becomes useless.

This is the core problem.

From the bank's perspective, the next step seems obvious. They want to modernize, so they bolt an AI assistant onto their app and expose a transfer API.

On the surface, it looks like progress. But what they have really done is connect their core ledger to a machine that does not think in fixed instructions.

That is the part people underestimate. An API call is simple. A request from an AI is not.

When an AI says "Send $500 to John," it is not the same as POST /transfer. It is the product of a conversation. It may depend on context, memory, or ambiguity in language. It may even have been influenced by a malicious prompt.

So of course you are not going to let an AI agent hit your transfer API directly. That would be insane.

The missing layer

You need something in between.

That something has to understand both sides.

It has to understand AI intent: that outputs are probabilistic, that "pay my largest bill" could mean different things, that "John" might not be unique, that context matters.

And it has to understand banking requirements: that every transfer needs authentication, authorization, fraud checks, compliance, and an audit trail.

AI and banking speak different languages. Without translation, nothing works.

This is why a new layer has to exist.

Not new rails. The rails are already there: ACH, wires, cards, crypto.

Not a thin wrapper. That just exposes the same risk.

A layer that can translate intent into safe execution. That can take a fuzzy request from an AI and turn it into a validated, compliant, reversible instruction a bank can accept. That can trace the conversation behind the request. That can enforce policies and flag unusual patterns before money moves.
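To make the idea more concrete, here is a minimal sketch of what such a checkpoint could look like. It is not Payman's implementation or any bank's API. `TransferIntent`, `Decision`, `IntentGate`, the payee directory, and the single per-transfer limit are all hypothetical stand-ins. The sketch shows only the shape of the checks: resolve who "John" is, refuse to guess when the name is ambiguous, enforce a policy, and record an audit entry tied to the conversation before anything reaches a transfer API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class TransferIntent:
    """A transfer an AI agent proposes (hypothetical shape, not a real API)."""
    conversation_id: str  # links the request to the conversation that produced it
    payee_name: str       # free text from the conversation, e.g. "John"
    amount: Decimal


@dataclass(frozen=True)
class Decision:
    approved: bool
    reason: str
    payee_account: Optional[str] = None


@dataclass
class IntentGate:
    """Hypothetical layer that sits between an AI agent and a transfer API."""
    payees: dict[str, list[str]]  # lowercase display name -> matching account ids
    per_transfer_limit: Decimal   # the only policy in this sketch
    audit_log: list[dict] = field(default_factory=list)

    def review(self, intent: TransferIntent) -> Decision:
        decision = self._decide(intent)
        # Record every decision, approved or refused, with its conversation id.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "conversation_id": intent.conversation_id,
            "payee_name": intent.payee_name,
            "amount": str(intent.amount),
            "approved": decision.approved,
            "reason": decision.reason,
        })
        return decision

    def _decide(self, intent: TransferIntent) -> Decision:
        if intent.amount <= 0:
            return Decision(False, "amount must be positive")
        matches = self.payees.get(intent.payee_name.strip().lower(), [])
        if not matches:
            return Decision(False, "unknown payee")
        if len(matches) > 1:
            # "John" is not unique: send it back for confirmation instead of guessing.
            return Decision(False, "ambiguous payee, needs confirmation")
        if intent.amount > self.per_transfer_limit:
            return Decision(False, "exceeds per-transfer limit")
        return Decision(True, "ok", payee_account=matches[0])


if __name__ == "__main__":
    gate = IntentGate(
        payees={"john": ["acct-001", "acct-002"], "martha": ["acct-003"]},
        per_transfer_limit=Decimal("1000"),
    )
    for name, amount in [("John", "500"), ("Martha", "500"), ("Martha", "5000")]:
        d = gate.review(TransferIntent("conv-1", name, Decimal(amount)))
        print(name, amount, d.approved, d.reason)
```

In this example the first request is refused because two accounts match "John", the second is approved, and the third is refused for exceeding the limit. All three land in the audit log. The gate fails closed: anything it cannot resolve unambiguously is refused rather than guessed at. A production layer would also need authentication, fraud scoring, and compliance screening at this same point.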

Without this layer, banks cannot trust AI. And if banks cannot trust AI, then AI cannot act. It will be stuck analyzing and recommending, but never executing.

With this layer, banks get the safety they require, and AI gets the ability to complete the loop from intent to outcome.

Every shift needs its infrastructure

Every major shift in technology needed its trust infrastructure.

- E-commerce had PayPal.

- Apps had Stripe.

- Cloud had IAM and compliance.

AI will need its own trust infrastructure as well.

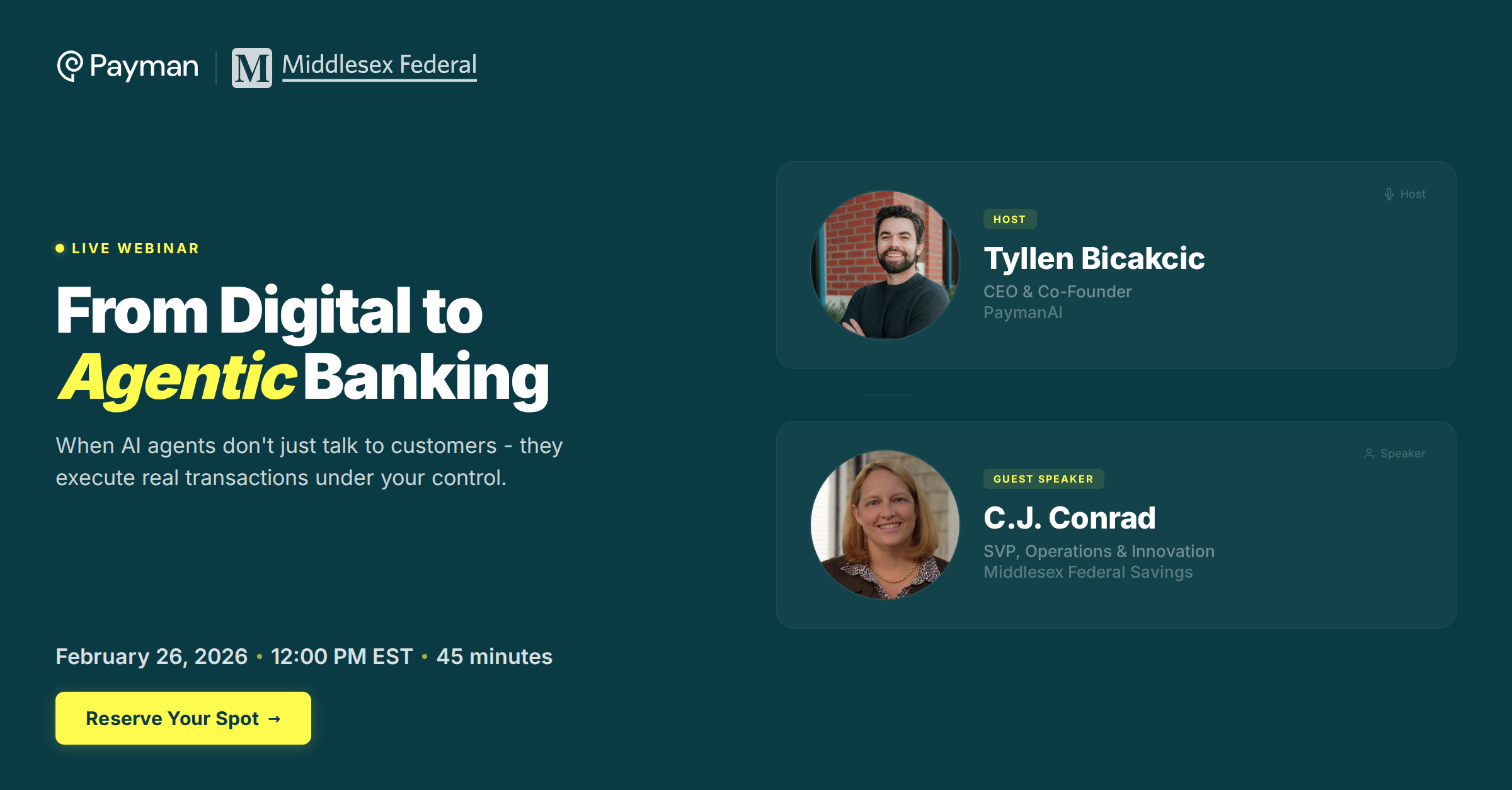

That is what we are building with Payman.