The Conversation That Happens in Every Boardroom

I have been in enough bank security conversations to know how they go. The CTO is cautiously optimistic. The CISO has a list of concerns. The Chief Risk Officer is doing mental math on regulatory exposure. And somewhere in the room, someone from compliance is already drafting objections.

The conversation always starts the same way:

"We are interested in AI agents for banking, but..."

That "but" contains multitudes.

But what if it makes a mistake? But what if it gets hacked? But what if an employee misuses it? But what will the regulators say?

Strip away the specific objections, though, and you find that every concern reduces to two fundamental questions:

- "How can I sleep at night?"

- "What if an employee goes rogue?"

These are not really questions about AI. They are questions about trust, control, and accountability, the same questions banks have been asking about every new technology since the telegraph.

Question 1: "How Can I Sleep at Night?"

This question sounds emotional, but it is actually deeply rational. The person asking it is responsible for systems that move real money, often billions of dollars daily. They have spent their career building controls, passing audits, and maintaining the kind of operational discipline that keeps regulators happy and customers safe.

When they ask "how can I sleep at night?", they are really asking:

"Can I maintain the same level of control I have today?"

The Philosophy of Control in Banking

Banking security has always been about layered controls. No single point of failure. No single person with unlimited access. Every action checked, logged, and reversible.

This is not paranoia. It is hard-won wisdom from centuries of financial disasters. The banker's mantra is "trust, but verify." Or more accurately: "verify, then verify again, then maybe trust a little."

AI agents trigger alarm bells because they feel like they might bypass these layers. An AI that can initiate transactions sounds like giving someone a master key. And in banking, master keys do not exist for good reason.

The Answer: AI Operates Within Your Control Framework

Here is the counterintuitive truth: a well-designed AI agent is more controllable than a human employee.

When Payman integrates with a bank's core systems, the AI agent operates within explicitly defined boundaries:

- Transactions below $X? Proceed automatically.

- Transactions above $X? Require human approval.

- New payees? Trigger verification workflows.

- Unusual patterns? Flag and pause.

These are not suggestions. They are hard constraints enforced at the API level. The AI literally cannot bypass them because the underlying systems will not allow it.
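To make "enforced at the API level" concrete, here is a minimal sketch of the four rules above as a server-side check that runs before any agent-initiated payment reaches the core system. It is not Payman's API: `evaluate`, `Policy`, the numeric threshold standing in for $X, and the risk-score cutoff are all hypothetical. The agent never talks to the core system directly; it only receives the decision.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed"
    REQUIRE_APPROVAL = "require_approval"
    VERIFY_PAYEE = "verify_payee"
    FLAG_AND_PAUSE = "flag_and_pause"


@dataclass(frozen=True)
class Policy:
    approval_threshold: int  # hypothetical stand-in for "$X", in dollars; each bank sets its own
    anomaly_cutoff: float    # hypothetical risk score at or above which a pattern counts as unusual


@dataclass(frozen=True)
class PaymentRequest:
    amount: int
    payee_id: str
    risk_score: float  # assumed to come from an upstream fraud model, 0.0 to 1.0


def evaluate(req: PaymentRequest, known_payees: set[str], policy: Policy) -> Decision:
    """Apply the rules from most to least severe; runs server-side, outside the agent."""
    if req.risk_score >= policy.anomaly_cutoff:
        return Decision.FLAG_AND_PAUSE
    if req.payee_id not in known_payees:
        return Decision.VERIFY_PAYEE
    # Conservative choice: an amount exactly at the threshold also needs a human.
    if req.amount >= policy.approval_threshold:
        return Decision.REQUIRE_APPROVAL
    return Decision.PROCEED
```

Checking the most severe condition first means a large payment to an unknown payee with an anomalous risk score is paused outright rather than merely routed for approval.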

Compare this to a human employee, who might get busy and skip a verification step, make a judgment call that bends the rules, forget to log a transaction, or be socially engineered into granting an exception.

The AI does not get busy. It does not make judgment calls outside its parameters. It does not forget. And even if someone tries to socially engineer it, it cannot grant an exception, because the limits are enforced by the underlying systems rather than by its own judgment.

Step-Up Authentication: The AI Participates in Security

Banks worry about AI bypassing MFA and OTP flows. But AI agents can participate in these flows:

- AI initiates the request

- System flags it as requiring step-up auth

- Human receives OTP/MFA challenge

- Human approves (or does not)

- AI proceeds (or does not) based on human authorization

The AI is not bypassing your security controls. It is using them.
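Here is a minimal sketch of that flow, assuming a hypothetical `StepUpAuth` service that issues single-use, time-limited six-digit codes. In a real deployment the code would be delivered to the human's device and never returned to the agent; it is returned here only so the example can run.

```python
import hmac
import secrets
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class StepUpChallenge:
    code: str
    expires_at: float
    used: bool = False


class StepUpAuth:
    """Hypothetical step-up service: the agent can open a challenge, only a human can answer it."""

    def __init__(self, ttl_seconds: float = 120.0) -> None:
        self.ttl = ttl_seconds
        self._challenges: dict[str, StepUpChallenge] = {}

    def open_challenge(self, request_id: str) -> str:
        # Six-digit one-time code; a real system would send it to the human, not return it.
        code = f"{secrets.randbelow(10**6):06d}"
        self._challenges[request_id] = StepUpChallenge(code, time.monotonic() + self.ttl)
        return code

    def verify(self, request_id: str, submitted: str) -> bool:
        challenge = self._challenges.get(request_id)
        if challenge is None or challenge.used or time.monotonic() > challenge.expires_at:
            return False
        challenge.used = True  # single use: any attempt, right or wrong, consumes it
        return hmac.compare_digest(challenge.code, submitted)


def run_with_step_up(request_id: str, auth: StepUpAuth, human_response: Optional[str]) -> str:
    """The agent proceeds only if the human approved by supplying a valid code."""
    if human_response is None:  # the human declined or never answered
        return "declined"
    return "executed" if auth.verify(request_id, human_response) else "declined"
```

Consuming the challenge on the first attempt blocks guessing at the cost of requiring a fresh challenge after a typo, and `hmac.compare_digest` avoids leaking the code through timing differences.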

Real-Time Monitoring: Visibility You Have Never Had

Every action an AI agent takes is logged with microsecond precision: what was requested, who initiated it, what context triggered it, what the outcome was.

This is not just an audit trail. It is a complete operational record. Your compliance team can replay the AI's decision-making process step by step. Try getting that level of visibility from a human employee making decisions based on experience and intuition.
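Here is a sketch of what such a record and a step-by-step replay might look like, assuming each agent task carries a hypothetical `request_id` that ties its actions together. The field names mirror the four questions above (what, who, what context, what outcome); they are illustrative, not a real log schema.

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ActionRecord:
    request_id: str    # hypothetical ID tying together every step of one agent task
    step: int          # position of this action within the task
    requested: str     # what was requested
    initiated_by: str  # who initiated it
    context: str       # what context triggered it
    outcome: str       # what the outcome was
    ts: str            # UTC timestamp, ISO 8601 with microsecond precision


def record(log: list[ActionRecord], request_id: str, step: int, requested: str,
           initiated_by: str, context: str, outcome: str) -> None:
    ts = datetime.now(timezone.utc).isoformat(timespec="microseconds")
    log.append(ActionRecord(request_id, step, requested, initiated_by, context, outcome, ts))


def replay(log: list[ActionRecord], request_id: str) -> list[dict]:
    """Return one task's actions in order, ready for a reviewer to walk through."""
    steps = sorted((r for r in log if r.request_id == request_id), key=lambda r: r.step)
    return [asdict(r) for r in steps]
```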

Question 2: "What If an Employee Goes Rogue?"

This question reveals a deeper truth about banking security: the biggest risks often come from inside.

Every bank has stories. The trader who hid losses until they became catastrophic. The branch manager who approved fraudulent loans. The IT administrator who sold customer data.

Internal threats are not theoretical. They are a constant operational reality.

The Accountability Problem

Here is the challenge with human employees: they are autonomous agents operating with judgment and discretion. That is what makes them valuable, but it is also what makes them risky.

A human employee can make decisions without documenting their reasoning, build relationships that lead to trust being extended inappropriately, gradually expand their access through institutional knowledge, and hide mistakes or malfeasance in the complexity of daily operations.

Detecting rogue behavior requires pattern analysis, tip-offs, or luck.

The Answer: AI Creates Accountability Infrastructure

Immutable Audit Trails: Every action an AI agent takes is logged to an immutable audit system with timestamp, action, amount, user, approval chain, context, risk score, and outcome.

This is not just logging. It is forensic-grade evidence. If something goes wrong, you can reconstruct exactly what happened. You cannot gaslight an audit log.
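One common way to make a log tamper-evident is hash chaining: each entry stores the SHA-256 hash of the entry before it, so altering a past record breaks every link after it. The sketch below uses the fields listed above; the class and field names are hypothetical, and this is one technique among several, not necessarily the one Payman uses.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always produces the same hash.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class HashChainedLog:
    """Append-only log in which every entry commits to the hash of the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, action: str, amount_cents: int, user: str, approval_chain: list[str],
               context: str, risk_score: float, outcome: str) -> None:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(timespec="microseconds"),
            "action": action,
            "amount_cents": amount_cents,
            "user": user,
            "approval_chain": list(approval_chain),
            "context": context,
            "risk_score": risk_score,
            "outcome": outcome,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry, or one deleted mid-chain, fails."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

A chain on its own only makes edits detectable. Someone able to rewrite the whole log could recompute every hash, so production systems typically also keep entries on write-once storage or record the latest hash somewhere the log's operator cannot alter.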

Scoped Permissions: The AI agent operates with the permissions of the user who invokes it, not with elevated privileges. If a teller-level employee tries to use the AI to initiate a large wire transfer, it fails. Not because the AI refuses, but because that employee does not have authorization.

The AI does not create new attack surface. It operates through your existing access control framework.
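A minimal sketch of the teller example, with hypothetical role names and dollar limits. The point is structural: the agent holds no credentials of its own, so every downstream call is checked against the entitlements of whoever invoked it.

```python
from dataclasses import dataclass


class PermissionDenied(Exception):
    pass


@dataclass(frozen=True)
class User:
    user_id: str
    role: str


# Hypothetical per-role wire limits in dollars; real values come from the bank's entitlement system.
ROLE_WIRE_LIMITS = {"teller": 5_000, "supervisor": 250_000, "treasury": 10_000_000}


def initiate_wire(invoking_user: User, amount: int) -> str:
    """Checked against the human's entitlements, never against an agent credential."""
    limit = ROLE_WIRE_LIMITS.get(invoking_user.role, 0)  # unknown roles get no wire authority
    if amount > limit:
        raise PermissionDenied(f"{invoking_user.role} may not send {amount}")
    return f"wire of {amount} queued for {invoking_user.user_id}"


class Agent:
    def __init__(self, invoking_user: User) -> None:
        # The agent only carries a reference to its caller; it has no identity of its own.
        self.user = invoking_user

    def handle(self, amount: int) -> str:
        return initiate_wire(self.user, amount)
```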

The Detection Advantage: The real insight is that AI makes rogue behavior easier to detect, not harder.

Because every AI action is logged with full context, anomaly detection becomes dramatically more effective. Human behavior is noisy and inconsistent. AI behavior is precise and predictable. Deviations stand out.
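As an illustration, here is about the simplest anomaly check there is: a z-score against a per-user baseline, for example the number of agent-initiated transfers a user triggers per day. The metric, the function name, and the cutoff of three standard deviations are assumptions for the sketch. Real fraud models are far richer, but the principle that a tight baseline makes deviations obvious is the same.

```python
import statistics


def is_anomalous(history: list[float], value: float, z_cutoff: float = 3.0) -> bool:
    """Flag a value more than z_cutoff population standard deviations from the baseline mean."""
    if len(history) < 2:
        return False  # not enough baseline yet to judge
    mean = statistics.mean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        return value != mean  # perfectly steady baseline: any change stands out
    return abs(value - mean) / stdev > z_cutoff
```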

The Deeper Truth: AI Extends Human Judgment

Both questions reflect a fundamental anxiety: that AI represents a loss of human control. But the reality is more nuanced.

AI agents make tactical decisions within strategic constraints.

Yes, AI agents are autonomous and can decide how to achieve a customer intent. They can break down "transfer $500 to savings" into the specific API calls, verification steps, and confirmations needed. They can decide the sequence of actions, handle edge cases, and adapt to different scenarios.

But the strategic decisions remain human: which workflows to enable, what transaction thresholds to set, which approvals to require, what monitoring to implement, and what the AI is allowed to do at all.
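The split between tactical and strategic decisions can be sketched like this: a hypothetical `BankConfig` holds the bank's strategic choices, and a hypothetical `plan_transfer` function performs the tactical decomposition of "transfer $500 to savings". The step names are placeholder strings, not real banking API calls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BankConfig:
    # Strategic decisions made by the bank; the agent reads these but cannot change them.
    enabled_workflows: frozenset[str]
    approval_threshold: int  # hypothetical, in dollars


def plan_transfer(amount: int, source: str, target: str, config: BankConfig) -> list[str]:
    """Tactical decomposition of a transfer intent into ordered, hypothetical steps."""
    if "internal_transfer" not in config.enabled_workflows:
        raise PermissionError("internal_transfer workflow is not enabled")
    steps = [
        f"check_balance({source})",
        f"verify_account_ownership({target})",
    ]
    if amount >= config.approval_threshold:
        steps.append("request_human_approval()")  # inserted because the bank's policy says so
    steps += [
        f"transfer({amount}, {source} -> {target})",
        "send_confirmation()",
    ]
    return steps
```

The agent chooses the order and content of the steps; whether the workflow exists at all, and where human approval kicks in, comes from configuration it cannot edit.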

Think of an AI agent as an extremely skilled specialist who can handle complex procedures independently within their domain. They can process transactions faster than any human, follow intricate workflows without errors, maintain perfect documentation, and operate 24/7.

But they operate within Payman's constraint framework. They cannot override programmed limits, hide their actions, or expand their scope beyond what the bank has authorized.

The human remains in control. The AI just makes that control more effective.

The Competitive Reality

Banks that move first on AI are not taking risks with customer funds. They are recognizing an important truth: the question is not whether AI is safe enough for banking. It is whether traditional banking is efficient enough for the AI era.

The banks winning right now are the ones that figured out:

- AI agents can be more controlled than human processes

- Perfect audit trails are a feature, not a bug

- Step-up authentication works with AI, not against it

- The real risk is not AI going rogue. It is falling behind competitors who figured this out first

The two questions - "how can I sleep at night?" and "what if someone goes rogue?" - have good answers. The real question is how long you can afford to wait while your competitors implement them.

What Comes Next

This is Part 1 of our series on AI banking security. Coming up:

- Part 2: Building Your AI Governance Framework

- Part 3: The Regulatory Landscape

- Part 4: Implementation Playbook

Want to see how this works with your specific systems? Contact our team for a technical walkthrough.

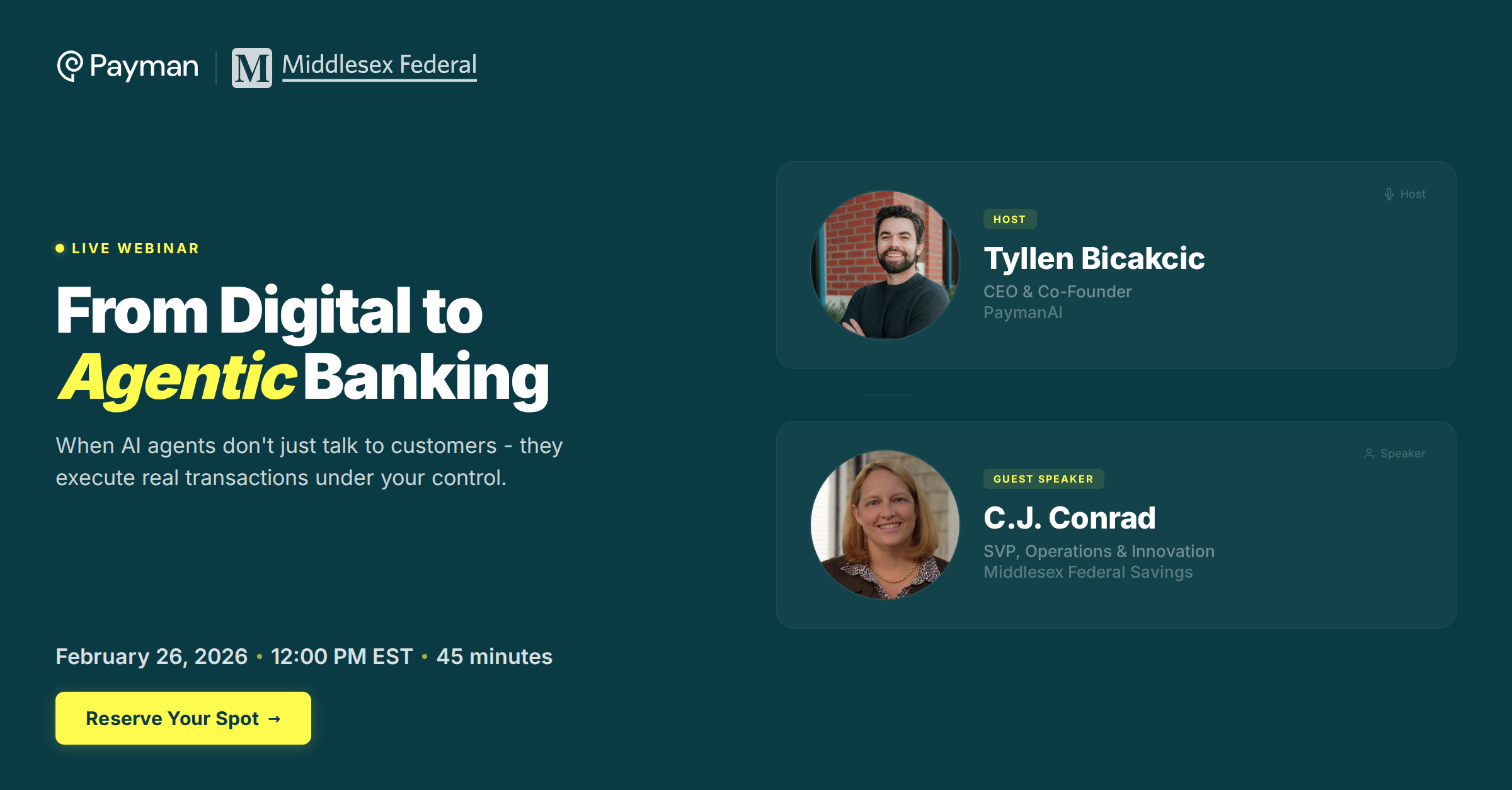

Payman is building the infrastructure that lets AI agents handle real money. We work with banks, fintechs, and enterprises to enable secure, auditable, controlled AI operations. If the future of banking is going to include AI, it should include AI that makes bankers sleep better, not worse.