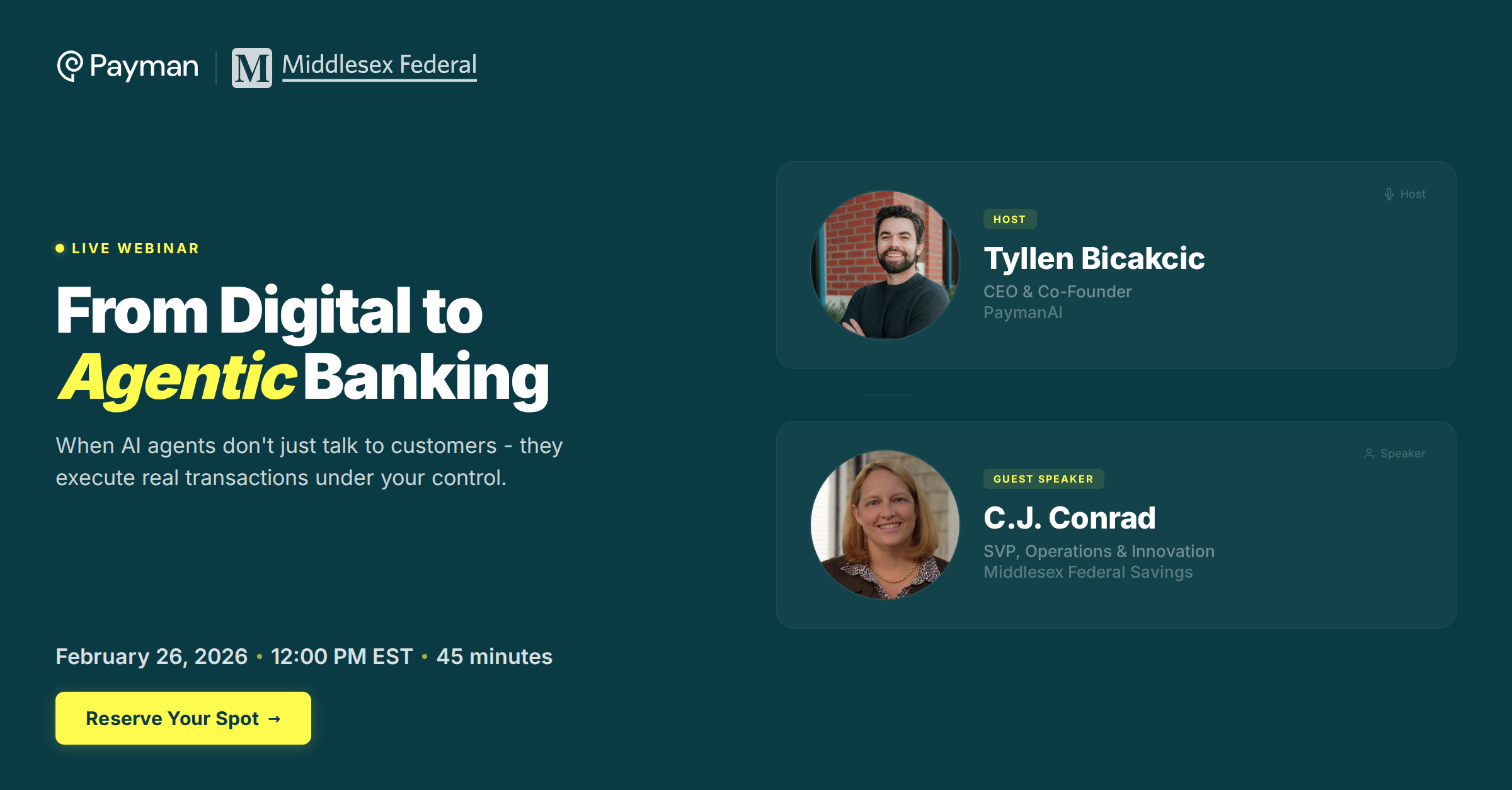

Tyllen Bicakcic, CEO & Co-Founder of Payman, sat down with Rocky Yu, Founder & CEO of AGI House, to unpack the question "How do you trust AI to move money safely and reliably?" and what it takes to move from demos to dependable, enterprise-grade systems.

Key Highlights

Tyllen's intro: Payman is building the trust layer between AI and money so agents can move funds safely. ~18 months in, they've raised $13M backed by Visa, Coinbase, and Circle, and are working with banks on agentic money movement.

Milestone from the session: In January, Payman powered what Tyllen called the first ACH transaction by an AI agent, in San Francisco, the same city where the first human-initiated ACH transaction took place. Since then, the focus has been bank-grade reliability at scale.

Core Gap

Finance APIs assume deterministic clicks; AI is probabilistic and chooses when to call tools. Payments can't be left to chance—so you need guardrails, policies, and clear responsibility around how agents interact with APIs.

Why Developers Stall

Building money guardrails drains time from products. Teams fear an agent "sending money on behalf of someone," so they stop. Payman abstracts the heavy lift so builders inherit authorization flows and safe defaults.

First-Layer Guardrails

The first layer defines who authorizes an action, the delegator's policies, spend limits, approved payees, and what data the agent can share (like account info). Granular permissions are required for real usage.
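As a rough illustration of what such first-layer checks could look like, here is a minimal Python sketch. It is not Payman's API: `DelegationPolicy`, `PaymentRequest`, `check_payment`, and `redact` are hypothetical names, and the rules (one delegator, a per-payment limit, a payee allowlist, a list of shareable data fields) are assumptions drawn from the list above.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class DelegationPolicy:
    """Rules a delegator sets for one agent (hypothetical shape)."""
    delegator_id: str
    per_payment_limit: Decimal
    approved_payees: frozenset[str]
    shareable_fields: frozenset[str] = frozenset()


@dataclass(frozen=True)
class PaymentRequest:
    """A payment the agent wants to make on someone's behalf."""
    delegator_id: str
    payee: str
    amount: Decimal


def check_payment(policy: DelegationPolicy, request: PaymentRequest) -> tuple[bool, str]:
    """Return (allowed, reason); the request is denied unless every rule passes."""
    if request.delegator_id != policy.delegator_id:
        return False, "request is not on behalf of this policy's delegator"
    if request.amount <= 0:
        return False, "amount must be positive"
    if request.payee not in policy.approved_payees:
        return False, "payee is not on the approved list"
    if request.amount > policy.per_payment_limit:
        return False, "amount exceeds the per-payment limit"
    return True, "allowed"


def redact(policy: DelegationPolicy, account_data: dict[str, str]) -> dict[str, str]:
    """Keep only the fields the delegator has allowed the agent to share."""
    return {k: v for k, v in account_data.items() if k in policy.shareable_fields}
```

The check is deterministic and sits outside the model, so a probabilistic agent can propose a payment but cannot approve its own request.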

Second-Layer Protections

Monitor prompt and conversation history to catch prompt injection and malicious intent. Maintain a ledger and auditability so developers and enterprises can review how and why an agent acted.
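One way to make such a ledger reviewable is to record each action with its stated reason and chain the entries by hash, so later edits are detectable. The sketch below is an assumption for illustration only: the session did not describe how Payman stores its ledger, and `AuditLedger`, its fields, and the hash-chaining approach are hypothetical choices.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    """Stable SHA-256 over an entry's contents (keys sorted for determinism)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLedger:
    """Append-only log of what an agent did and why (hypothetical design)."""
    entries: list[dict] = field(default_factory=list)

    def record(self, agent_id: str, action: str, reason: str) -> dict:
        # Each entry stores the previous entry's hash, forming a chain.
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {"agent_id": agent_id, "action": action,
                "reason": reason, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

A reviewer can call `verify()` before trusting the log, then read the `reason` fields to see how and why the agent acted.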

Enterprise Controls

A deployment "control center" with audit logs for legal, kill switches to halt agents, version control for agent updates, scoped permissions (read vs write), and user management to revoke access when needed.
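To make the kill switch and scoped permissions concrete, here is a small Python sketch under stated assumptions. `ControlCenter`, `Scope`, and the method names are hypothetical and do not come from Payman's product; version control and audit logging are left out to keep the example short.

```python
from enum import Enum


class Scope(Enum):
    READ = "read"    # e.g. view balances
    WRITE = "write"  # e.g. initiate a payment


class ControlCenter:
    """Tracks per-agent scopes and halted agents (hypothetical design)."""

    def __init__(self) -> None:
        self._scopes: dict[str, set[Scope]] = {}
        self._halted: set[str] = set()

    def grant(self, agent_id: str, *scopes: Scope) -> None:
        self._scopes.setdefault(agent_id, set()).update(scopes)

    def revoke(self, agent_id: str) -> None:
        """Remove all access for an agent."""
        self._scopes.pop(agent_id, None)

    def kill(self, agent_id: str) -> None:
        """Kill switch: a halted agent is denied regardless of its scopes."""
        self._halted.add(agent_id)

    def is_allowed(self, agent_id: str, scope: Scope) -> bool:
        if agent_id in self._halted:
            return False
        return scope in self._scopes.get(agent_id, set())
```

Checking the halted set before the scopes means an operator can stop an agent immediately without first untangling its permissions.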

Implementation Stance

Don't give agents direct bank account access. Insert a middle layer with strict controls. Speak the bank's language, show how the AI behaves, and provide hosting options (including on-prem) to earn trust.

Compliance Reality

Teams asked about SOC 2/PCI alongside traditional and blockchain rails. The message from the session: run this as a layer on top of existing systems so it fits current controls rather than forcing a rip-and-replace.

Regulatory Lesson Learned

An earlier approach gave an agent its own bank account, which pooled user funds and drifted into money-transmitter territory. The pivot: build where deposits and trust already exist to keep compliance straightforward.

Stablecoins

Useful for international reach via U.S. bank partners without integrating every foreign bank on day one. Over time, partner locally where preferred rails and liquidity already exist; trust comes first, then speed.

Human + AI

Tyllen built Payman so "AIs pay people." Capital becomes the incentive for collaboration. Examples of the vision: agents rebalance checking and savings, request vendor details, and pay your nanny, so you don't have to think about money.

Multi-Agent Future

Enterprises will run many agents, not one, to "complete intent." Payments need a specialist agent (Tyllen's term: a "paygent") with extra guardrails and support for emerging protocols as part of the system.

Takeaway

Mitigate risk end-to-end, then grant autonomy. With authorization, policy, monitoring, and enterprise controls in place, AI agents can safely move money—turning experimental tools into production systems that pay people for valuable work.